DATASETS

This publicly available dataset of multi-modal sensing features consists of 450 seconds of audio, visual, and force/torque data acquired on actual trees.

Using a hand-held probe device, we collected contact interaction data on Eugenia uniflora and Ilex verticillata trees planted across the campus of Carnegie Mellon University. We mainly used lateral motion, gently swiping the device against the plants in the same manner each time to keep the contact interactions consistent. Each trial contains approximately one minute of contact interaction with a single object class: leaf, twig, or trunk.

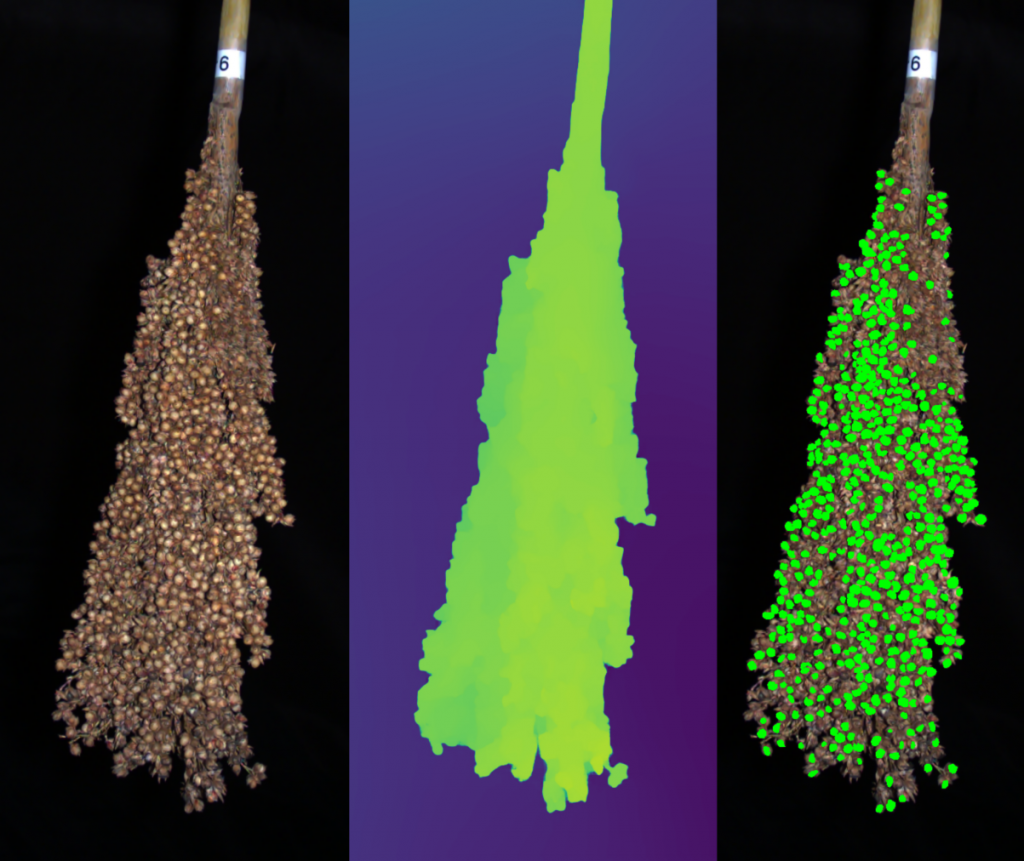

High-Resolution Stereo Scans of 100 Sorghum Panicles

This dataset contains stereo images, depth data, camera position/orientation, and camera information for 100 sorghum panicles (the seed-bearing head of the sorghum stalk), as well as semantic segmentation labels for a subset of the data. The 100 sampled sorghum stalks are drawn from 10 different species, 10 stalks per species. For image capture, an illumination-invariant flash camera developed at CMU was swept around the panicle using a UR5 robotic arm. Approximately 150 image pairs were captured for each panicle, giving a full 3D view of each stalk. More information on the exact dataset contents can be found in the README.

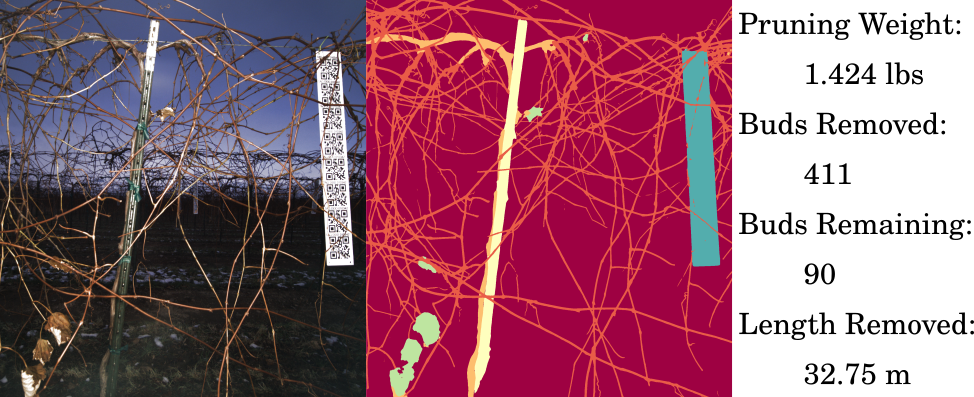

Stereo Data for 144 Winter Grapevines

This dataset contains stereo images, depth data, camera position/orientation, and camera information for 144 winter grapevines, as well as semantic segmentation labels for a subset of the data. The grapevines were captured before winter pruning and represent a complex, organic structure. For image capture, two actively lit stereo cameras (one pair side-facing, one pair tilted 45 degrees downward) were driven along the grapevine row with a linear actuator, and stereo pairs were captured at 7 locations along each vine. More details can be found in the README.
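
To get a rough sense of scale for the two stereo datasets, the capture figures quoted above can be multiplied out. The sketch below is a back-of-the-envelope estimate only. The helper `approx_stereo_pairs` is a hypothetical name, and the grapevine figure assumes that both stereo cameras record one pair at each of the 7 locations, which the description does not state explicitly. The README for each dataset remains the reference for actual file counts.

```python
# Rough capture-count estimates from the figures quoted in the descriptions.
# These are approximations, not counts read from the released files.


def approx_stereo_pairs(subjects: int, pairs_per_subject: int) -> int:
    """Return the estimated number of stereo pairs for a dataset."""
    return subjects * pairs_per_subject


# Sorghum: 100 panicles, approximately 150 image pairs per panicle.
sorghum_pairs = approx_stereo_pairs(subjects=100, pairs_per_subject=150)

# Grapevines: 144 vines, 7 capture locations per vine.
# Assumption: each of the two stereo cameras records one pair per location.
CAMERAS_PER_LOCATION = 2
grapevine_pairs = approx_stereo_pairs(
    subjects=144, pairs_per_subject=7 * CAMERAS_PER_LOCATION
)

print(f"sorghum: about {sorghum_pairs} stereo pairs")
print(f"grapevines: about {grapevine_pairs} stereo pairs (under the assumption above)")
```

If only one camera fires at each location, the grapevine estimate halves, so treat both numbers as order-of-magnitude guides when budgeting storage or download time.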