Localization estimates a robot’s position by fusing data from four sources: wheel encoders, an inertial measurement unit, a sun compass, and camera images (visual odometry). An Extended Kalman Filter (EKF) combines them into a single best estimate. Unlike on Earth, this must be done on the Moon without GPS. The estimate is always imperfect (including for large, determinate rovers), and without special care it is especially challenging for a small rover like MoonRanger because:

- Low-mounted cameras generate only limited feature sets for visual odometry.

- Wheel slip on soft terrain leads to poor odometry estimates from wheel encoders.

- Sudden jerks when the rover drives over a rock and drops down, due to its lack of suspension, produce sharp position discontinuities that defy even the best filters.

Visual odometry uses camera images like the one shown below (orange-tinted due to the IR filter on the camera) to track and estimate the translation of the rover. A feature extractor identifies interesting points, such as rock edges and shadow corners, in successive images. As the rover moves, these points appear to move in the images. The best fit to the relative motion of the points from image to image gives the motion of the camera (and hence of the robot) between the images. This constitutes one localization input to the Kalman filter.
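The "best fit" step can be illustrated with a simplified sketch. It assumes the matched feature points have already been projected into a flat 2D ground plane and that every match is correct. A real visual odometry pipeline works in 3D and rejects bad matches, so this is only an illustration of the idea, not the team's implementation. The function name `fit_rigid_2d` is hypothetical.

```python
import math

def fit_rigid_2d(prev_pts, curr_pts):
    """Least-squares rotation and translation mapping prev_pts onto curr_pts.

    Both arguments are equal-length lists of (x, y) tuples for the same
    features seen in two successive images (assumed already matched).
    Returns (theta, tx, ty) such that curr ~= R(theta) * prev + t.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")

    # Centroids of each point set.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(c[0] for c in curr_pts) / n
    ccy = sum(c[1] for c in curr_pts) / n

    # Accumulate dot and cross products of the centered points.
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (cx, cy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = cx - ccx, cy - ccy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # Closed-form optimal rotation in 2D.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)

    # Translation that aligns the rotated centroid of prev with curr.
    tx = ccx - (c * pcx - s * pcy)
    ty = ccy - (s * pcx + c * pcy)
    return theta, tx, ty
```

The fit describes how the scene points appear to move. The camera's own motion is the inverse of that transform, since static rocks that appear to shift backward imply the rover moved forward.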

We develop and test localization on our surrogate rover, Morphin. Morphin has a STIM300 IMU (Inertial Measurement Unit), whose measured accelerations are double-integrated. The resulting estimates of translation and rotation constitute a second motion estimate input to the EKF. Wheel encoder readings are used in the classical skid-steer dead reckoning equation to generate a third estimate of differential motion for the EKF. A sun sensor reads the robot’s absolute bearing with respect to the Moon’s latitude-longitude grid, and this constitutes the fourth sensor input to the EKF. The EKF combines the four to compute its composite best localization estimate.
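As a rough illustration of wheel-encoder dead reckoning, the sketch below uses the common differential-drive approximation for a skid-steer vehicle. The exact equation used on Morphin is not given here, so the function name, the parameter names, and the idea of a single "effective" track width are all assumptions made for the example.

```python
import math

def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def dead_reckon_step(x, y, yaw, d_left, d_right, track_width):
    """Advance a planar pose by one encoder interval.

    d_left and d_right are the distances traveled by the left and right
    wheel sides during the interval (encoder ticks already converted to
    meters). track_width is the effective left-right wheel separation.
    """
    d_center = 0.5 * (d_left + d_right)        # forward travel of the body
    d_yaw = (d_right - d_left) / track_width   # change in heading

    # Integrate along the heading at the middle of the interval.
    mid_yaw = yaw + 0.5 * d_yaw
    x += d_center * math.cos(mid_yaw)
    y += d_center * math.sin(mid_yaw)
    yaw = wrap_angle(yaw + d_yaw)
    return x, y, yaw

# Example: drive forward 0.10 m per side, then turn in place.
pose = (0.0, 0.0, 0.0)
pose = dead_reckon_step(*pose, d_left=0.10, d_right=0.10, track_width=0.40)
pose = dead_reckon_step(*pose, d_left=-0.05, d_right=0.05, track_width=0.40)
```

This model assumes the wheels do not slip. On soft terrain the encoders report more travel than actually occurred, which is why wheel odometry alone drifts.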

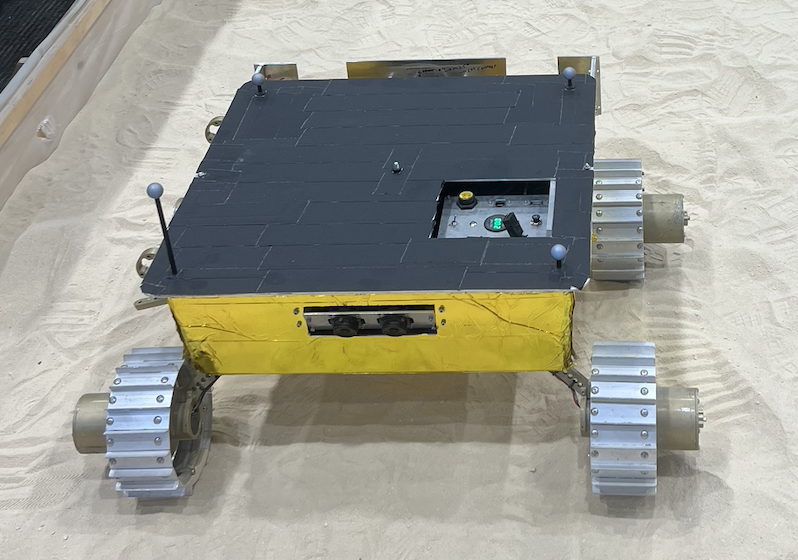

We test with the Morphin surrogate since the flight version of MoonRanger is still in development, and because that flight version can never be subjected to dirty testing in a terrestrial moonyard. Morphin’s weight, dimensions and wheel geometry differ from the flight version of MoonRanger, but it has enough fidelity for relevant algorithm development, coding and testing.

We test in a 24-by-24-foot “moonyard” in CMU’s Gates Hillman Center. The moonyard has a motion capture system (MoCap) that covers its central 16-by-16-foot area. The MoCap provides the actual position of the robot for comparison to the robot’s computed localization estimate.
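One simple way to score an estimate against MoCap is sketched below. It assumes the two trajectories are already time-aligned and expressed in the same coordinate frame, which takes extra work in practice. The function names and the choice of summary metrics are illustrative, not the team's actual evaluation code.

```python
import math

def position_errors(estimates, ground_truth):
    """Per-sample Euclidean distance between estimated and true positions.

    Both arguments are equal-length lists of (x, y) tuples sampled at the
    same instants.
    """
    if len(estimates) != len(ground_truth) or not estimates:
        raise ValueError("trajectories must be non-empty and equal length")
    return [math.hypot(ex - gx, ey - gy)
            for (ex, ey), (gx, gy) in zip(estimates, ground_truth)]

def summarize(errors):
    """Root-mean-square, maximum, and end-of-run position error."""
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {"rmse": rmse, "max": max(errors), "final": errors[-1]}
```

The end-of-run error is often the most telling number for dead-reckoned estimates, since uncorrected drift accumulates over the length of a run.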

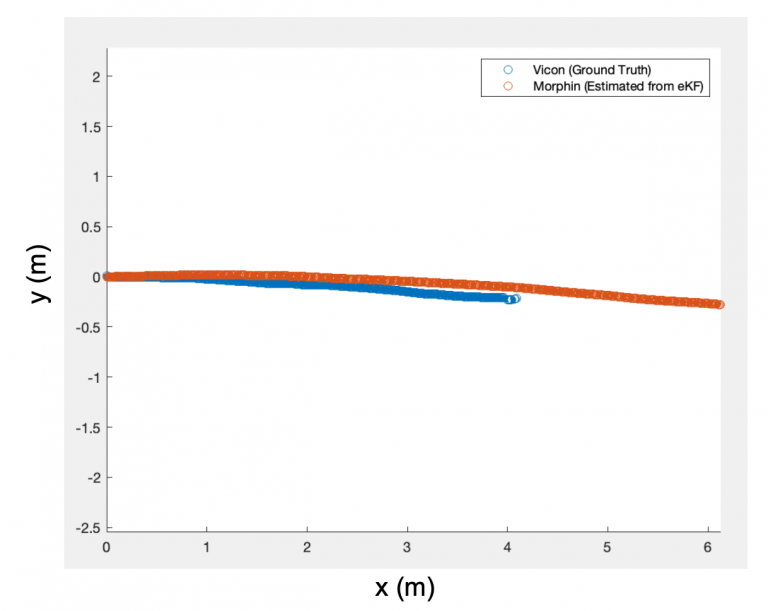

Figure 3a shows a sample straight run, comparing where the EKF predicted Morphin to be with where it actually was. The prediction differs from the actual position because wheel slip was not compensated in the EKF. The team is currently working on a slip-compensated model that uses visual odometry (VO) to track translation, which would reduce the effect of slippage on state estimation.

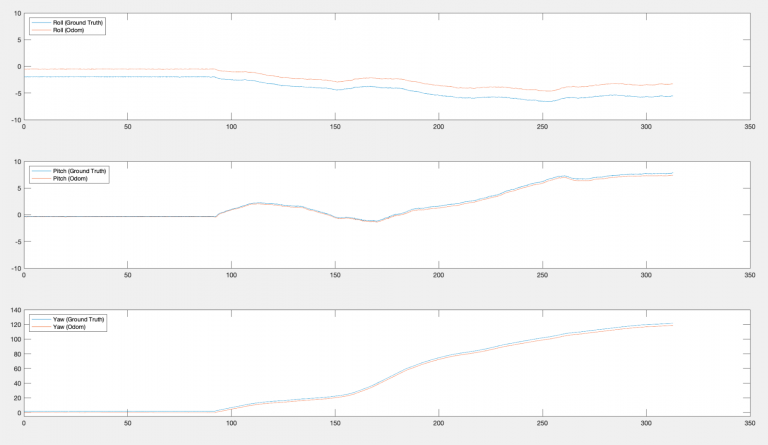

That said, the EKF effectively tracks the orientation of Morphin, as seen in Figure 3b. Tracking changes in yaw (the heading of the rover) is especially important so that the rover knows its driving direction.
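To give a feel for how a filter tracks heading, here is a deliberately reduced sketch: a one-state Kalman filter for yaw only, predicted from a gyro rate and corrected by an absolute bearing such as a sun sensor might provide. The rover's actual EKF estimates a full state from four sources, so the function names, the noise parameters, and the simple noise model here are all assumptions for illustration.

```python
import math

def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def predict(yaw, var, gyro_rate, dt, rate_noise_var):
    """Propagate yaw with the gyro rate; uncertainty grows."""
    yaw = wrap_angle(yaw + gyro_rate * dt)
    var = var + rate_noise_var * dt * dt
    return yaw, var

def update(yaw, var, measured_yaw, meas_var):
    """Correct yaw with an absolute bearing; uncertainty shrinks."""
    # Wrap the innovation so 3.0 rad and -3.1 rad are treated as close.
    innovation = wrap_angle(measured_yaw - yaw)
    gain = var / (var + meas_var)
    yaw = wrap_angle(yaw + gain * innovation)
    var = (1.0 - gain) * var
    return yaw, var

# Example: one gyro step followed by one absolute-bearing correction.
yaw, var = 0.0, 0.01
yaw, var = predict(yaw, var, gyro_rate=0.2, dt=0.1, rate_noise_var=0.001)
yaw, var = update(yaw, var, measured_yaw=0.05, meas_var=0.02)
```

Wrapping the innovation matters because heading is circular. Without it, a measurement just past the ±π boundary would pull the estimate the long way around.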

The rover mobility team is ardently working on these challenging localization problems.

Credits (rover mobility team): Benjamin Kolligs, Haidar Jamal, Varsha Kumar, Samuel Ong, Tushaar Jain, Alex Li, Vaibhav Shete

Technical Writer: Sarah Abrams